The Ethical Challenges and Biases of AI in Medical Imaging

While Artificial Intelligence holds immense promise for improving medical imaging, its development and implementation come with a host of complex ethical challenges. As we integrate these powerful tools into clinical practice, it is crucial that developers, clinicians, and regulators carefully consider the potential pitfalls to ensure that AI is used safely, equitably, and responsibly.

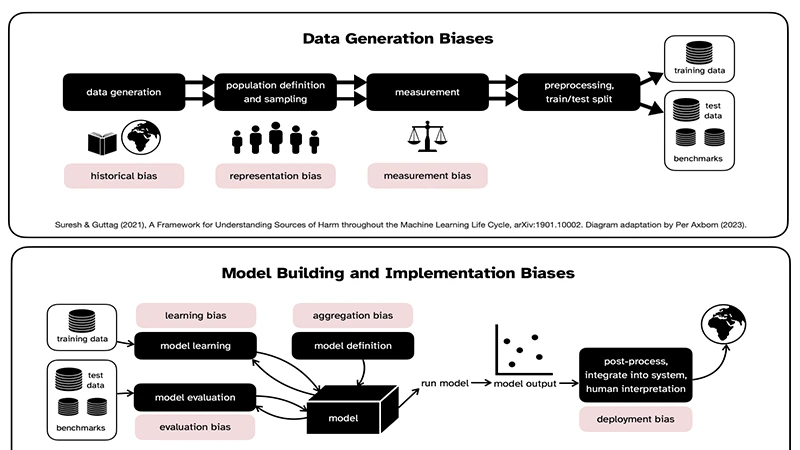

1. The Problem of Algorithmic Bias

An AI model is only as good as the data it is trained on. If the training data is not diverse and representative of the real-world patient population, the algorithm can develop significant biases.

- Demographic Bias: If an AI is trained primarily on data from one demographic (e.g., a specific ethnicity or gender), it may perform less accurately on underrepresented groups. For example, a skin cancer detection algorithm trained mostly on images of light-skinned individuals may fail to correctly identify lesions on darker skin tones.

- Institutional Bias: An algorithm trained on data from a single hospital may learn to recognize patterns specific to that hospital's scanners or patient population. It may perform poorly when deployed at a different hospital with different equipment or demographics.

Addressing bias requires a conscious effort to curate large, diverse, and multi-institutional datasets for training and validation.
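One practical way to surface this kind of bias is to report performance separately for each subgroup rather than as a single overall number. The sketch below is only an illustration: the function name `sensitivity_by_group`, the group labels, and the records are all made up for this example and do not come from any real dataset or library.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: sensitivity} for records of (group, y_true, y_pred).

    Sensitivity = true positives / all actual positives. Groups with no
    positive cases map to None because the ratio is undefined there.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    groups = set()
    for group, y_true, y_pred in records:
        groups.add(group)
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                true_pos[group] += 1
    return {
        g: (true_pos[g] / positives[g]) if positives[g] else None
        for g in sorted(groups)
    }


# Made-up illustrative records: (group label, ground truth, model prediction)
records = [
    ("site_A", 1, 1), ("site_A", 1, 1), ("site_A", 1, 0), ("site_A", 0, 0),
    ("site_B", 1, 0), ("site_B", 1, 1), ("site_B", 1, 0), ("site_B", 0, 1),
]
result = sensitivity_by_group(records)
for group, value in result.items():
    print(group, value)
```

In this toy data the model catches two of three positive cases at one site and only one of three at the other, a gap that a single pooled sensitivity figure would hide.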

2. The "Black Box" Problem

Many advanced deep learning models are considered "black boxes." This means that while the AI can make a highly accurate prediction, it cannot explain *why* it made that decision in a way that humans can understand. It learns complex patterns that don't always correspond to traditional medical reasoning.

- Lack of Trust: It can be difficult for a physician to trust and act on a recommendation from a system that cannot explain its reasoning.

- Hidden Flaws: If the model is making errors, its "black box" nature can make it very difficult to understand and correct the underlying problem. Researchers are actively working on "explainable AI" (XAI) to make these models more transparent.
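To give a flavor of what an explainability technique can look like, here is a minimal sketch of occlusion sensitivity: mask one patch of the image at a time and record how much the model's score drops. It is one simple idea among many in XAI, not a description of any particular product. The `toy_score` function is a hypothetical stand-in for a real model, and the 4x4 "image" is invented for the example.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Score drop when each patch x patch region is masked with `fill`."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heat = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Copy the image and blank out one patch
            masked = [row[:] for row in image]
            for r in range(top, min(top + patch, height)):
                for c in range(left, min(left + patch, width)):
                    masked[r][c] = fill
            drop = baseline - score_fn(masked)
            # A large drop means the model relied on this region
            for r in range(top, min(top + patch, height)):
                for c in range(left, min(left + patch, width)):
                    heat[r][c] = drop
    return heat


def toy_score(image):
    # Hypothetical stand-in for a model: mean brightness of the top-left 2x2 corner
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0


image = [
    [1.0, 1.0, 0.2, 0.2],
    [1.0, 1.0, 0.2, 0.2],
    [0.2, 0.2, 0.2, 0.2],
    [0.2, 0.2, 0.2, 0.2],
]
heat = occlusion_map(image, toy_score)
for row in heat:
    print(row)
```

Because the toy model only looks at the top-left corner, that is the only region where masking changes the score. With a real model, a map like this can reveal whether a prediction depends on the anatomy of interest or on something irrelevant, such as a scanner marking.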

3. Data Privacy and Security

Training a robust AI model requires access to enormous amounts of medical data, all of which is highly sensitive Protected Health Information (PHI).

- Anonymization: Ensuring that all datasets used for training are properly and irreversibly anonymized is a massive technical and logistical challenge.

- Security: The systems that house this data and run the AI models must be protected with the highest levels of cybersecurity to prevent breaches.
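As a rough illustration of why de-identification is harder than it sounds, the sketch below strips a few direct identifiers from a metadata record and replaces the patient ID with a keyed hash. The field names, the `deidentify` helper, and the key are all hypothetical. Note that a keyed hash is pseudonymization rather than the irreversible anonymization described above, since anyone holding the key can still link records, and the sketch does nothing about identifying details inside the image pixels themselves.

```python
import hashlib
import hmac

# Hypothetical field names; a real dataset defines its own schema.
DIRECT_IDENTIFIERS = {"patient_name", "birth_date", "address", "phone"}


def deidentify(record, secret_key):
    """Drop direct identifiers and replace patient_id with a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "patient_id" in cleaned:
        digest = hmac.new(
            secret_key,
            str(cleaned["patient_id"]).encode("utf-8"),
            hashlib.sha256,
        )
        # Shortened hex digest keeps scans from one patient linkable
        cleaned["patient_id"] = digest.hexdigest()[:16]
    return cleaned


# Made-up example record
record = {
    "patient_id": "12345",
    "patient_name": "Jane Doe",
    "birth_date": "1970-01-01",
    "modality": "CT",
    "body_part": "CHEST",
}
cleaned = deidentify(record, b"demo-key-not-for-production")
print(cleaned)
```

Even after a step like this, the remaining fields (a rare diagnosis, an unusual scan date, a small hospital) can sometimes be combined to re-identify a patient, which is part of what makes the problem so difficult.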

4. Accountability and Responsibility

This is one of the most significant legal and ethical questions: if an AI-assisted diagnosis is wrong and a patient is harmed, who is responsible?

- Is it the physician who accepted the AI's recommendation?

- Is it the hospital that purchased and implemented the software?

- Is it the company that developed and sold the algorithm?

Clear regulatory frameworks and legal precedents are still being developed to address this new frontier of medical liability. For now, the prevailing view is that the AI is a tool and that ultimate responsibility remains with the human clinician who makes the final diagnostic decision.

Conclusion: Proceeding with Caution

The ethical challenges of AI in medical imaging are not reasons to abandon the technology, but they are critical guideposts for its responsible development. By actively working to mitigate bias, improve transparency, protect privacy, and establish clear lines of accountability, the medical community can ensure that AI is integrated into the field in a way that is not only powerful but also fair, safe, and trustworthy for all patients.
